Installing Gloo Gateway on HashiCorp Nomad

Gloo Gateway can be used as an Ingress/Gateway for the Nomad platform. This guide walks through the process of installing Gloo Gateway on Nomad, using Consul for service discovery/configuration and Vault for secret storage.

HashiCorp Nomad is a popular workload scheduler that can be used in place of, or in combination with, Kubernetes to run long-lived processes on a cluster of hosts. Nomad supports native integration with Consul and Vault, making configuration, service discovery, and credential management easy for application developers.

You can see a demonstration of Gloo Gateway using Consul, Nomad, and Vault in this YouTube video.

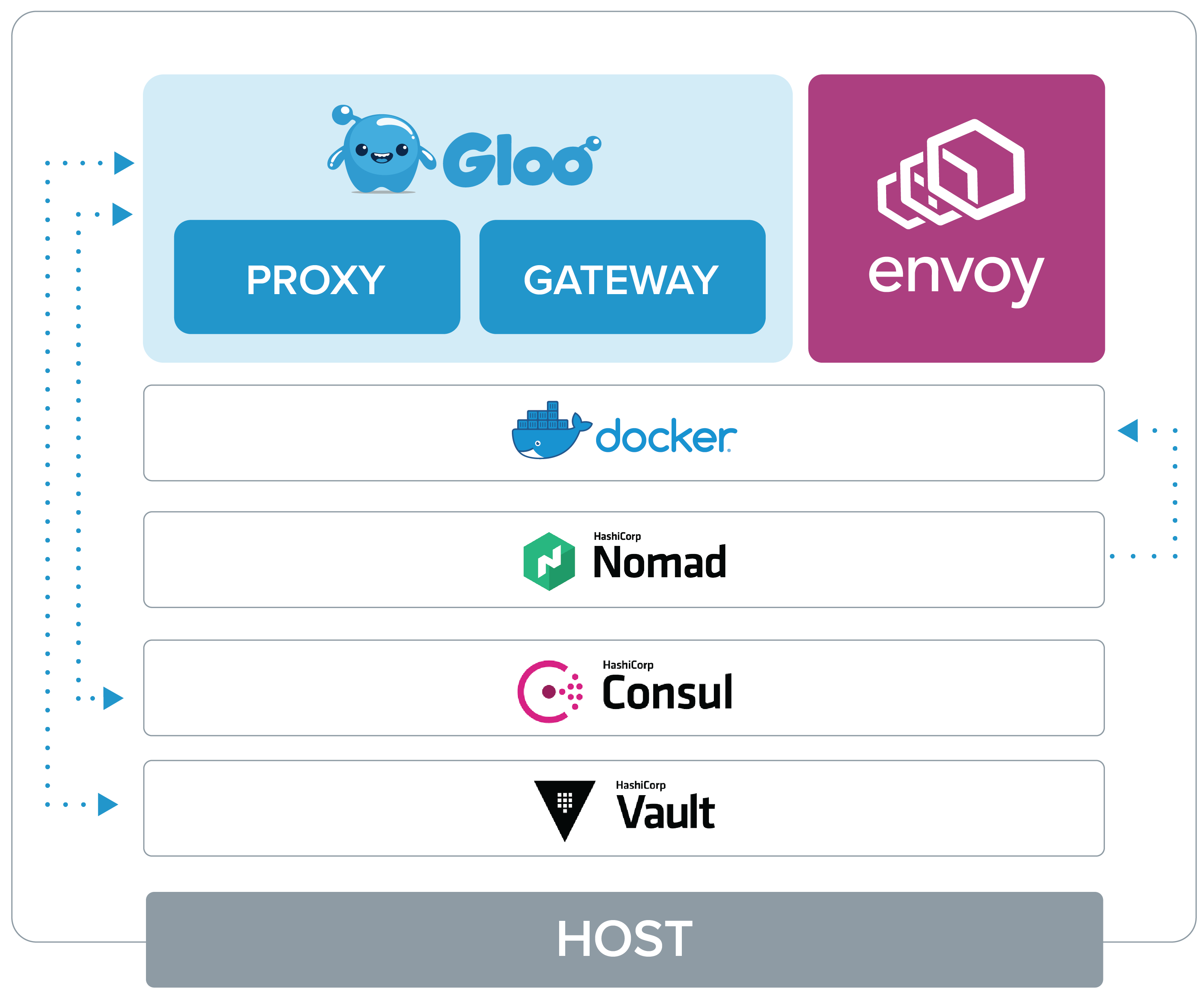

Architecture

Gloo Gateway on Nomad uses multiple pieces of software for deployment and functionality.

- Docker: The Gloo Gateway components (discovery, gateway, and the Envoy-based gateway proxy) are deployed as Docker containers

- Nomad: Nomad is responsible for scheduling, creating, and maintaining containers hosting the Gloo Gateway components

- Consul: Consul is used to store key/value pairs that represent the configuration of Gloo Gateway

- Vault: Vault is used to house sensitive data used by Gloo Gateway

- Levant: Levant is a templating and deployment tool for Nomad jobs; it renders the Gloo Gateway Nomad job with values from a variable file

- glooctl: The command-line tool for installing and configuring Gloo Gateway

Preparing for Installation

Before proceeding to the installation, you will need to complete some prerequisites.

Prerequisite Software

Installation on Nomad requires the following:

- Levant and glooctl installed on your local machine

- Docker, Consul, Vault, and Nomad installed on the target host machine (which can also be your local machine). A Vagrantfile is provided that includes everything needed to run Nomad, Consul, and Vault in a virtual machine.

If you want to run locally on macOS, you will also need to install Weave Net.
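To confirm the prerequisites before you start, a small shell check like the one below can help. It is a minimal sketch: `check_prereqs` is a hypothetical helper, not something shipped in the Gloo Gateway repository, and it only verifies that each command is on your PATH, not which version is installed.

```shell
# Hypothetical helper (not part of the Gloo Gateway repo): report any of the
# given commands that cannot be found on PATH. Returns 1 if any are missing.
check_prereqs() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, run `check_prereqs levant glooctl` on your local machine and `check_prereqs docker consul vault nomad` on the target host (add `weave` on macOS, or `vagrant` if you plan to use the Vagrantfile).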

Download the Installation Files

This tutorial uses files stored on the Gloo Gateway GitHub repository.

To install Gloo Gateway on Nomad, clone the repository and change to the install/nomad directory:

git clone https://github.com/solo-io/gloo

cd gloo/install/nomad

The files used for installation live in the install/nomad directory.

├── demo-local.sh
├── demo-vagrant.sh
├── gloo-policy.hcl
├── jobs
│   ├── gloo.nomad
│   └── petstore.nomad
├── launch-consul-vault-nomad-dev.sh
├── README.md
└── Vagrantfile

The Gloo Gateway Nomad job (gloo.nomad) and the Pet Store job (petstore.nomad) are in the jobs directory.

The gloo.nomad job is experimental and designed to be used with a specific Vault + Consul + Nomad setup.

The Levant variables for the Gloo Gateway Nomad job are written to a ./variables.yaml file by the ./demo-local.sh or ./demo-vagrant.sh script.

Deploying Gloo Gateway with Nomad

The scripts and files included in the Gloo Gateway repository let you run all the components on your local machine or use Vagrant to provision a virtual machine with all the components running. There are three deployment options:

- Complete demo

- Local

- Vagrant

The complete demo stands up the entire environment, including Gloo Gateway and the routing configuration. The other two options provide a more hands-on experience, where you walk through the steps of installing Gloo Gateway and the Pet Store application, and configuring the routing.

Run the Complete Demo

If your environment is set up with Docker, Nomad, Consul, Vault, and Levant, you can run demo-local.sh to create a local demo of Gloo Gateway routing to the Pet Store application. The script spins up dev instances of Consul, Nomad, and Vault, uses Nomad to deploy Gloo Gateway and the Pet Store application, and finally creates a route on Gloo Gateway to the Pet Store application.

./demo-local.sh

After the script completes its setup process, you can test the routing rule on Gloo Gateway by running the following command:

curl <nomad-host>:8080/

If running on macOS or with Vagrant:

curl localhost:8080/

If running on Linux, use the host's IP address on the docker0 interface (172.17.0.1 by default):

curl 172.17.0.1:8080/

The value returned should be:

[{"id":1,"name":"Dog","status":"available"},{"id":2,"name":"Cat","status":"pending"}]
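If you want to script this check, the sketch below picks the URL for the setups described above and verifies that both pets from the sample data come back. `gloo_url`, `check_petstore_response`, and the `GLOO_HOST`/`VAGRANT` variables are hypothetical names introduced for illustration; the Linux branch assumes the default docker0 address 172.17.0.1.

```shell
# Hypothetical helper: print the Gloo Gateway HTTP URL for this guide's setups.
# GLOO_HOST overrides the guess (e.g. your remote <nomad-host>); VAGRANT=1
# selects the Vagrant port forward on localhost.
gloo_url() {
  if [ -n "${GLOO_HOST:-}" ]; then
    host=$GLOO_HOST
  elif [ "${VAGRANT:-0}" = "1" ] || [ "$(uname -s)" = "Darwin" ]; then
    host=localhost
  else
    # Linux without Vagrant: the host IP on docker0 (172.17.0.1 by default)
    host=172.17.0.1
  fi
  echo "http://$host:8080/"
}

# Hypothetical check: succeeds if the response on stdin contains both pets
# from the sample Pet Store data.
check_petstore_response() {
  body=$(cat)
  case "$body" in
    *'"name":"Dog"'*) ;;
    *) return 1 ;;
  esac
  case "$body" in
    *'"name":"Cat"'*) ;;
    *) return 1 ;;
  esac
}
```

Usage: `curl -s "$(gloo_url)" | check_petstore_response && echo "routing works"`.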

Running Nomad Locally

If you’ve installed Nomad/Consul/Vault locally, you can use launch-consul-vault-nomad-dev.sh to run them on your local system.

If running locally (without Vagrant) on macOS, you will need to install and launch Weave Net:

weave launch

If running locally on Linux, you’ll need to switch SELinux to permissive mode to run the demo (or add permission for Docker containers to access / on their filesystem):

sudo setenforce 0
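The two platform-specific steps above can be summarized as a small helper that prints the command your host needs. This is a sketch under the assumption that you run locally without Vagrant; `local_prep_command` is a hypothetical name, and it prints rather than executes the command so you can review it first.

```shell
# Hypothetical helper: print the host-preparation command this guide calls for
# when running locally without Vagrant. $1 is the OS name (as printed by
# `uname -s`); $2 is the SELinux mode (as printed by `getenforce`, Linux only).
# Prints nothing when no preparation step applies.
local_prep_command() {
  case "$1" in
    Darwin)
      echo "weave launch"
      ;;
    Linux)
      # setenforce 0 switches SELinux to permissive mode until the next reboot
      if [ "${2:-}" = "Enforcing" ]; then
        echo "sudo setenforce 0"
      fi
      ;;
  esac
}
```

Usage: `local_prep_command "$(uname -s)" "$(getenforce 2>/dev/null)"`.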

Then run the launch-consul-vault-nomad-dev.sh script.

./launch-consul-vault-nomad-dev.sh

The script launches dev instances of Consul, Vault, and Nomad, then keeps running to monitor those services in debug mode. It also loads two key/value pairs into Consul for the default gateway-proxy and gateway-proxy-ssl configurations.

You can stop all of the services by pressing Ctrl-C.

Once these services are running, you are ready to install Gloo Gateway.

Running Nomad Using Vagrant

The provided Vagrantfile will run Nomad, Consul, and Vault inside a VM on your local machine.

First, download and install HashiCorp Vagrant.

Then run the following command:

vagrant up

Ports will be forwarded to your local system, allowing you to access services on the following ports (on localhost):

| Service | Port |
|---|---|
| nomad | 4646 |
| consul | 8500 |
| vault | 8200 |
| gloo/http | 8080 |
| gloo/https | 8443 |
| gloo/admin | 19000 |
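To confirm the port forwards after `vagrant up`, you can probe the services from your host. The sketch below assumes curl is installed and uses the standard `/v1/status/leader` endpoint of Nomad and Consul and the `/v1/sys/health` endpoint of Vault (which returns an error status while Vault is sealed); `vagrant_port` and `check_vagrant_services` are hypothetical helpers.

```shell
# Hypothetical helper: the localhost port that the Vagrant VM forwards for each
# service in the table above. Returns 1 for an unknown service name.
vagrant_port() {
  case "$1" in
    nomad)      echo 4646 ;;
    consul)     echo 8500 ;;
    vault)      echo 8200 ;;
    gloo/http)  echo 8080 ;;
    gloo/https) echo 8443 ;;
    gloo/admin) echo 19000 ;;
    *) echo "unknown service: $1" >&2; return 1 ;;
  esac
}

# Probe Nomad, Consul, and Vault through the forwarded ports.
# The gloo/* ports only answer after Gloo Gateway has been deployed.
check_vagrant_services() {
  for probe in nomad:/v1/status/leader consul:/v1/status/leader vault:/v1/sys/health; do
    svc=${probe%%:*}   # text before the first colon
    path=${probe#*:}   # text after the first colon
    if curl -sf "http://localhost:$(vagrant_port "$svc")$path" >/dev/null; then
      echo "$svc: up"
    else
      echo "$svc: not reachable"
    fi
  done
}
```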

Once these services are running, you are ready to install Gloo Gateway.

Install Gloo Gateway

Once you have a base environment set up with Consul, Vault, and Nomad running, you are ready to deploy the Nomad job that creates the necessary containers to run Gloo Gateway.

This section assumes that Consul, Nomad, and Vault are running either locally or remotely.

If you are running these services remotely, you will need to set the -address and -consul-address flags to match your configuration. The default port for Nomad is 4646 and for Consul is 8500. Make sure to give the full address of your Nomad and Consul servers, e.g. https://my.consul.local:8500.

levant deploy \
  -var-file variables.yaml \
  -address http://<nomad-host>:<nomad-port> \
  -consul-address http://<consul-host>:<consul-port> \
  jobs/gloo.nomad

If running locally or with Vagrant, you can omit the address flags from the deployment command:

levant deploy \
  -var-file variables.yaml \
  jobs/gloo.nomad
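Because the same command is used with and without the address flags, you may find it convenient to wrap it. The function below is a hypothetical wrapper, not part of the repository: it assumes it runs from the directory containing variables.yaml and adds -address and -consul-address only when you pass full addresses. The LEVANT variable is an assumption added so you can dry-run the wrapper with LEVANT=echo.

```shell
# Hypothetical wrapper around `levant deploy`.
#   $1 = job file, $2 = optional full Nomad address, $3 = optional full Consul address
# Set LEVANT=echo to print the arguments instead of running levant.
deploy_job() {
  job=$1
  nomad_addr=${2:-}
  consul_addr=${3:-}
  set -- deploy -var-file variables.yaml
  if [ -n "$nomad_addr" ]; then
    set -- "$@" -address "$nomad_addr"
  fi
  if [ -n "$consul_addr" ]; then
    set -- "$@" -consul-address "$consul_addr"
  fi
  ${LEVANT:-levant} "$@" "$job"
}
```

Use `deploy_job jobs/gloo.nomad` for a local or Vagrant setup, or `deploy_job jobs/petstore.nomad http://<nomad-host>:4646 http://<consul-host>:8500` for remote servers.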

You can monitor the status of the deployment job by checking the Nomad UI or by executing the following command:

nomad job status gloo
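Instead of re-running the status command by hand, you can poll it. The sketch below is a hypothetical helper, not a Nomad feature: it assumes the first `Status = <value>` line that `nomad job status -short` prints describes the job itself, and waits until that value is running. A job can report running before every task is healthy, so treat this as a coarse signal and still check the allocations in the full status output.

```shell
# Hypothetical helper: read `nomad job status` output on stdin and succeed
# if the first "Status = <value>" line reads "running".
job_is_running() {
  awk -F'=' '/^Status[ \t]*=/ { gsub(/[ \t]/, "", $2); print $2; exit }' |
    grep -qx running
}

# Hypothetical helper: poll a job every 5 seconds, up to $2 attempts (default 60).
wait_for_job() {
  job=$1
  tries=${2:-60}
  while [ "$tries" -gt 0 ]; do
    if nomad job status -short "$job" 2>/dev/null | job_is_running; then
      echo "$job is running"
      return 0
    fi
    tries=$((tries - 1))
    sleep 5
  done
  echo "timed out waiting for $job" >&2
  return 1
}
```

Usage: `wait_for_job gloo`, and later `wait_for_job petstore`.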

When the deployment is complete, you are ready to deploy the Pet Store application to demonstrate Gloo Gateway’s capabilities.

Deploying a Sample Application

In this step we will deploy a sample application to demonstrate the capabilities of Gloo Gateway on your system. We’re going to deploy the Pet Store application to Nomad using Levant.

If you are running these services remotely, you will need to set the -address and -consul-address flags to match your configuration. The default port for Nomad is 4646 and for Consul is 8500. Make sure to give the full address of your Nomad and Consul servers, e.g. https://my.consul.local:8500.

levant deploy \
  -var-file='variables.yaml' \
  -address http://<nomad-host>:<nomad-port> \
  -consul-address http://<consul-host>:<consul-port> \
  jobs/petstore.nomad

If running locally or with Vagrant, you can omit the address flags from the deployment command:

levant deploy \
  -var-file='variables.yaml' \
  jobs/petstore.nomad

You can monitor the status of the deployment job by executing the following command:

nomad job status petstore

When the deployment is complete, you are ready to create a route for the Pet Store application.

Create a Route to the PetStore

We can now use glooctl to create a route to the Pet Store app we just deployed:

glooctl add route \
  --path-prefix / \
  --dest-name petstore \
  --prefix-rewrite /api/pets \
  --use-consul

{"level":"info","ts":"2019-08-22T17:15:24.117-0400","caller":"selectionutils/virtual_service.go:100","msg":"Created new default virtual service","virtualService":"virtual_host:<domains:\"*\" > status:<> metadata:<name:\"default\" namespace:\"gloo-system\" > "}

+-----------------+--------------+---------+------+---------+-----------------+--------------------------------+
| VIRTUAL SERVICE | DISPLAY NAME | DOMAINS | SSL  | STATUS  | LISTENERPLUGINS | ROUTES                         |
+-----------------+--------------+---------+------+---------+-----------------+--------------------------------+
| default         |              | *       | none | Pending |                 | / -> gloo-system.petstore      |
|                 |              |         |      |         |                 | (upstream)                     |
+-----------------+--------------+---------+------+---------+-----------------+--------------------------------+

The --use-consul flag tells glooctl to write configuration to Consul key-value storage.

The route sends traffic from the root of the gateway to the /api/pets prefix on the Pet Store application. You can test it by running curl against the gateway proxy URL:

curl <nomad-host>:8080/

If running on macOS or with Vagrant:

curl localhost:8080/

If running on Linux, use the host's IP address on the docker0 interface (172.17.0.1 by default):

curl 172.17.0.1:8080/

curl returns the following JSON payload from the Pet Store application:

[{"id":1,"name":"Dog","status":"available"},{"id":2,"name":"Cat","status":"pending"}]

Next Steps

Congratulations! You’ve successfully deployed Gloo Gateway to Nomad and created your first route. Now you can delve deeper into traffic management with Gloo Gateway.

Most of the existing tutorials for Gloo Gateway use Kubernetes as the underlying platform, but they can be adapted for Nomad. Remember that all glooctl commands should be run with the --use-consul flag, and deployments need to be orchestrated through Nomad instead of Kubernetes.
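If you follow the Kubernetes-oriented tutorials on Nomad, a tiny wrapper saves you from forgetting the flag. `glooctl_consul` is a hypothetical shell function, not part of glooctl; it simply appends --use-consul to whatever glooctl command you pass, assuming the subcommand accepts that flag.

```shell
# Hypothetical convenience wrapper: run glooctl with --use-consul appended so
# configuration is written to Consul key-value storage.
glooctl_consul() {
  glooctl "$@" --use-consul
}
```

For example, `glooctl_consul add route --path-prefix / --dest-name petstore --prefix-rewrite /api/pets` runs the same route command used earlier in this guide.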